Parallelizing Workloads with Slurm Job Arrays

Do you have a script that needs to be run across many samples? Or maybe you need to parameter test a script with a variety of values? This blog post covers how to achieve this simply and efficiently with Slurm job arrays.

Let's say you have a simulation that you need to run many times, with a different set of parameters each run. Or you have a workload that you have tested successfully on a single sample, and now you need to apply it to 100 samples. In neuroimaging, for instance, you may run 100 brains through the same workflow. Many situations call for running a single script several times with one or more variables (e.g. an input file or a parameter value) changed each time. Your first instinct may be to submit a separate Slurm script for each iteration, substituting the required variable(s) each time. However, there is a much easier way: Slurm job arrays. This blog post describes how you can use Slurm job arrays to run all of those samples or parameter values in parallel from a single Slurm script.

Step 1: Test your workload

Before running a job array, it's always helpful to test your Slurm script on a single variable or parameter first, so that any obvious issues are caught early. Whether it's an incorrect path, a software package you forgot to load, or underestimated resources for the job, we've all been there, so don't worry! Once your script has run successfully on one sample, the remaining runs are much more likely to succeed too, which means less troubleshooting for your job array.

Take a look at our simple Slurm guide for beginners.

Step 2: Set the job array Slurm directive

To tell Slurm that you want to run the same script a specified number of times, add the job array directive --array at the top of your script alongside your other Slurm directives. For example, if you have 10 samples to run your script across, #SBATCH --array=1-10 tells Slurm to run the script 10 times, i.e. to create 10 independent array tasks numbered from 1 to 10.

Any resource directives you specify at the top of your script (e.g. --cpus-per-task, --nodes or --mem) are applied to each array task, i.e. you only need to specify the resources required for a single sample/task, not for the entire array.

By default, MaxArraySize is 1001, which means the largest array task index you can use is 1000 (valid indices run from 0 to MaxArraySize - 1). If you require a larger array, you will need to set a higher MaxArraySize value in the Slurm config file, e.g. /opt/slurm/etc/slurm.conf (the location can vary between installations).

Step 3: Understand the $SLURM_ARRAY_TASK_ID variable

When working with Slurm job arrays, a special environment variable comes into play: $SLURM_ARRAY_TASK_ID. Slurm sets this variable in each array task according to the --array directive at the top of your script. Using the example above of #SBATCH --array=1-10, $SLURM_ARRAY_TASK_ID is 1 in the first array task, 2 in the second, 3 in the third, and so on up to 10.

In the simplest case, you can use $SLURM_ARRAY_TASK_ID directly in your script to set up your job array. For example, if you have one input file per sample, named sample1.fa, sample2.fa, sample3.fa ... sample10.fa, then to have each of your 10 array tasks handle a separate sample file, all you need to do is replace the sample filename in your script with sample${SLURM_ARRAY_TASK_ID}.fa. Array task 1 will then process sample1.fa, array task 2 will process sample2.fa, and so on.
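Put together, a minimal version of that script might look like the sketch below. The job name and the `process_sample` function are hypothetical placeholders for your own command, and the `: "${SLURM_ARRAY_TASK_ID:=1}"` line is our own addition: it sets the variable to 1 only when it is unset, so you can run the script directly with bash for a quick local check before submitting it with sbatch.

```bash
#!/bin/bash
#SBATCH --job-name=fasta-array
#SBATCH --array=1-10

# Fall back to task 1 when run outside Slurm (our addition, for local testing).
: "${SLURM_ARRAY_TASK_ID:=1}"

# Each array task picks the input file matching its own task ID.
input="sample${SLURM_ARRAY_TASK_ID}.fa"

# Hypothetical placeholder for the real analysis command.
process_sample() {
    echo "Task ${SLURM_ARRAY_TASK_ID} processing $1"
}

process_sample "$input"
```

For example, assuming you saved it as myscript.sh, `SLURM_ARRAY_TASK_ID=3 bash myscript.sh` would print `Task 3 processing sample3.fa` without going through Slurm.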

But what if your files aren't named this way, or what if you needed to change multiple different values for each array task? That's where Step 4 comes in.

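$SLURM_ARRAY_TASK_ID in Python or R scripts

If your Slurm script runs a custom Python or R script, you can read $SLURM_ARRAY_TASK_ID inside it like any other environment variable: for example, with os.environ["SLURM_ARRAY_TASK_ID"] (after import os) in Python, or Sys.getenv("SLURM_ARRAY_TASK_ID") in R. In both languages the value comes back as a string, so convert it with int() or as.integer() if you need a number.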

Step 4: Create a config file for your array tasks

One of the best ways to deal with more complex configurations of job arrays is to create a simple config file that specifies the variables or parameters you would like to input for each array task. For example, if you would like to substitute in a sample name and a given parameter (in this case the sex of the sample) for each array task, you could create a simple config text file like the following:

```
ArrayTaskID SampleName Sex
1 Bobby M
2 Ben M
3 Amelia F
4 George M
5 Arthur M
6 Betty F
7 Julia F
8 Fred M
9 Steve M
10 Emily F
```

You can then use the $SLURM_ARRAY_TASK_ID variable and the first column to retrieve the required sample name and sex for each array task in your script using awk as follows:

```bash
#!/bin/bash
#SBATCH --job-name=myarrayjob
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=1
#SBATCH --array=1-10

# Specify the path to the config file
config=/path/to/config.txt

# Extract the sample name for the current $SLURM_ARRAY_TASK_ID
sample=$(awk -v ArrayTaskID="$SLURM_ARRAY_TASK_ID" '$1==ArrayTaskID {print $2}' "$config")

# Extract the sex for the current $SLURM_ARRAY_TASK_ID
sex=$(awk -v ArrayTaskID="$SLURM_ARRAY_TASK_ID" '$1==ArrayTaskID {print $3}' "$config")

# Append a message with the current $SLURM_ARRAY_TASK_ID, the sample name and the sex of the sample to output.txt
echo "This is array task ${SLURM_ARRAY_TASK_ID}, the sample name is ${sample} and the sex is ${sex}." >> output.txt
```

For a general introduction to awk, see this blog post. The first awk command in the script above sets the current $SLURM_ARRAY_TASK_ID as an awk variable called "ArrayTaskID" (-v ArrayTaskID=$SLURM_ARRAY_TASK_ID), looks up the row in the config file whose first column equals that value ($1==ArrayTaskID), and prints the value from the second column of that row ({print $2}), which is the sample name. The second awk command works the same way, but prints the value from the third column ({print $3}) to extract the sex.
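The script above runs awk twice, once per column. If you prefer a single pass, one option is the sketch below: awk prints both columns of the matching row, and shell parameter expansion splits them into `sample` and `sex`. The `load_task_row` function name is hypothetical, and the empty-row check is our addition so that a missing or mistyped config row fails the task instead of silently producing blank values.

```bash
#!/bin/bash
# Sketch: read SampleName and Sex for this array task with one awk pass.
# Assumes the space-separated config layout above (ArrayTaskID SampleName Sex).

# Fall back to task 1 when run outside Slurm (our addition, for local testing).
: "${SLURM_ARRAY_TASK_ID:=1}"

# load_task_row CONFIG: sets the globals `sample` and `sex` from the row whose
# first column equals $SLURM_ARRAY_TASK_ID; returns non-zero if no row matched.
load_task_row() {
    local config=$1 row
    # `exit` stops at the first matching row, so a duplicated ID cannot yield two lines.
    row=$(awk -v id="$SLURM_ARRAY_TASK_ID" '$1 == id {print $2, $3; exit}' "$config")
    sample=${row%% *}   # text before the first space
    sex=${row#* }       # text after the first space
    [ -n "$sample" ]
}

# Inside the job script (config path as in the example above):
# config=/path/to/config.txt
# load_task_row "$config" || { echo "No config row for task ${SLURM_ARRAY_TASK_ID}" >&2; exit 1; }
# echo "This is array task ${SLURM_ARRAY_TASK_ID}, the sample name is ${sample} and the sex is ${sex}." >> output.txt
```

Because the function returns non-zero when nothing matches, the commented `|| { ...; exit 1; }` line makes that task fail visibly in its Slurm output file rather than writing an incomplete line to output.txt.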

In this example, Slurm will create 10 array tasks, each requesting 1 CPU, because each task will inherit the resource directives specified at the top of the script. The output.txt file will look something like the following:

```text
This is array task 1, the sample name is Bobby and the sex is M.
This is array task 2, the sample name is Ben and the sex is M.
This is array task 3, the sample name is Amelia and the sex is F.
This is array task 4, the sample name is George and the sex is M.
This is array task 5, the sample name is Arthur and the sex is M.
This is array task 6, the sample name is Betty and the sex is F.
This is array task 7, the sample name is Julia and the sex is F.
This is array task 8, the sample name is Fred and the sex is M.
This is array task 9, the sample name is Steve and the sex is M.
This is array task 10, the sample name is Emily and the sex is F.
```

Note that in practice the lines may appear in a different order, since all 10 array tasks can run simultaneously provided 10 CPUs are available on the cluster.
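For larger sample sets, writing the config file by hand gets tedious. The sketch below generates one from the `.fa` files in a directory, numbering the rows with awk's built-in `NR` counter. The `make_config` name is hypothetical, it only produces the ArrayTaskID and SampleName columns (extra columns such as Sex would need to be added separately), and it assumes file names contain no spaces, because the config columns are space-separated.

```bash
#!/bin/bash
# Sketch: write a config file mapping array task IDs to the .fa files in a directory.
# Assumes file names contain no whitespace (the config columns are space-separated).

# make_config DIR OUTFILE
make_config() {
    local dir=$1 out=$2 f
    printf 'ArrayTaskID SampleName\n' > "$out"
    # The glob expands in sorted order, e.g. sample10.fa before sample2.fa.
    for f in "$dir"/*.fa; do
        [ -e "$f" ] || continue      # no matches: skip the unexpanded pattern
        basename "$f" .fa            # strip the directory and the .fa suffix
    done | awk '{print NR, $0}' >> "$out"   # NR numbers the rows 1, 2, 3, ...
}
```

You could then size the array from the file instead of hardcoding it, for example `n=$(( $(wc -l < config.txt) - 1 ))` (subtracting the header line) followed by `sbatch --array=1-"$n" myarrayjob.sh`, since options given on the sbatch command line take precedence over the matching #SBATCH directive in the script. Here config.txt and myarrayjob.sh are placeholder file names.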

Step 5: Submit and monitor your job

Your array job can be submitted with sbatch as usual. You will still retrieve a main job ID upon submission which is the ID for the entire job array:

Submitted batch job 209

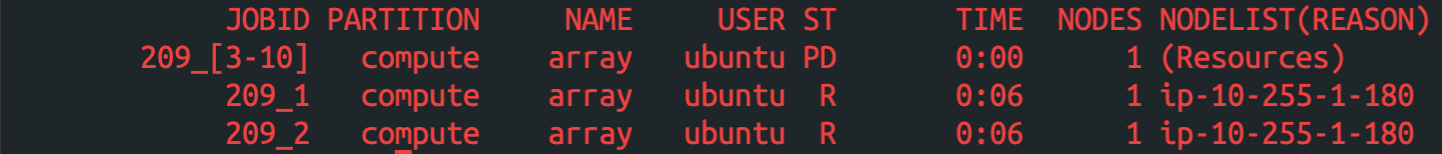

Slurm will then create an ID for each array task by appending the array task ID to the main job ID. In the example below you will notice that two of the array tasks are running 209_1 and 209_2, while the remaining 8 array tasks 209_[3-10] are pending while waiting for resources to become available:

You can cancel a particular array task using the respective JOBID in the first column, e.g. scancel 209_2, or you can cancel all array tasks in the array job by just specifying the main job ID, e.g. scancel 209.

Slurm will also generate a separate output file for each array task. By default it will be named slurm-%A_%a.out where %A is the main job ID and %a is the array task ID e.g. slurm-209_1.out. This can be changed with the --output directive if required.
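If you want the logs grouped in a folder and named after the job, one possible set of directives is sketched below. %A and %a are the patterns described above, and %x is sbatch's pattern for the job name. The logs/ directory is just our example; create it (e.g. with `mkdir -p logs`) before you submit, because Slurm does not create missing output directories.

```bash
#SBATCH --job-name=myarrayjob
#SBATCH --array=1-10
# %x = job name, %A = main job ID, %a = array task ID
#SBATCH --output=logs/%x_%A_%a.out
#SBATCH --error=logs/%x_%A_%a.err
```

With main job ID 209, array task 3 would then write to logs/myarrayjob_209_3.out and logs/myarrayjob_209_3.err. Without the --error line, stderr goes to the same file as stdout.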

Other Job Array Options

Limiting the number of tasks run at once

With array jobs, the scheduler on your cluster takes care of starting each array task once the requested resources become available. This means that if enough resources are free on your cluster, all of your array tasks can run simultaneously. To limit the number of tasks running at once, append %N to the --array specification, where N is the maximum number of tasks to run at the same time. For example, to run 100 array tasks but only 5 at a time, your --array directive would be:

#SBATCH --array=1-100%5

Specifying particular array tasks to run

The Slurm --array directive does not have to be a range from 1-N, where N is the number of tasks you wish to run. It can also be a comma-separated list of numbers, or a range with a given step size. This is handy if you have a simple list of values to use for your array tasks, or if you only want to run a particular subset of array tasks.

For instance, let's say you need to parameter test a particular script and the values you want to test are 3, 5 and 7. Instead of specifying --array=1-3 and creating a config file to substitute in the parameter value for each task, you can specify --array=3,5,7 and use $SLURM_ARRAY_TASK_ID as the parameter value directly, which removes the need for a config file (this works as long as your parameter values are non-negative integers). Similarly, if the values you want to test are 0, 5, 10, 15, 20, 25 and 30, you could specify --array=0-30:5, i.e. the range 0 to 30 with a step size of 5.
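As a sketch of the stepped-range case, the script below uses the task ID directly as the parameter value. The `run_model` function and its `threshold` argument are hypothetical stand-ins for your own command, and the fallback to 0 when `SLURM_ARRAY_TASK_ID` is unset is our addition for local testing.

```bash
#!/bin/bash
#SBATCH --job-name=param-sweep
#SBATCH --array=0-30:5

# Fall back to 0 when run outside Slurm (our addition, for local testing).
: "${SLURM_ARRAY_TASK_ID:=0}"

# With a stepped range, the task ID itself is the parameter value: 0, 5, 10, ..., 30.
param=$SLURM_ARRAY_TASK_ID

# Hypothetical placeholder for the real command that takes the parameter.
run_model() {
    echo "threshold=$1"
}

run_model "$param"
```

Outside Slurm, `seq 0 5 30` prints the same seven values that --array=0-30:5 generates, so a loop such as `for id in $(seq 0 5 30); do SLURM_ARRAY_TASK_ID=$id bash sweep.sh; done` runs every parameter once as a dry run (sweep.sh being whatever name you saved the script under).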

Alternatively, you may have run the full range of array tasks once and found that some of them failed, so you want to run just those tasks again. For example, if you ran the job array we set up in the steps above and the runs for samples Betty and Fred did not work, all you would need to do to rerun them is change the --array directive to their array task IDs from the config file, i.e. --array=6,8.
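One way to work out which IDs to rerun is to have each task leave a marker file when it finishes successfully, then compare those markers against the config file. The sketch below assumes a hypothetical convention where each successful task ends with `touch done/<SampleName>.done`; neither the done/ directory nor the `missing_tasks` function comes from the example above.

```bash
#!/bin/bash
# Sketch: list the array task IDs whose (hypothetical) done-marker is missing.
# Assumes each successful task ran `touch <marker_dir>/<SampleName>.done`.

# missing_tasks CONFIG MARKER_DIR -> prints a comma-separated list such as "6,8"
missing_tasks() {
    local config=$1 marker_dir=$2
    # Keep only rows whose first column is a number (skips the header line).
    awk '$1 ~ /^[0-9]+$/ {print $1, $2}' "$config" |
        while read -r id name; do
            [ -e "$marker_dir/$name.done" ] || echo "$id"
        done |
        paste -s -d, -               # join the IDs onto one line with commas
}
```

Because options on the sbatch command line override the #SBATCH directives in the script, you could then resubmit only those tasks without editing the file, for example `ids=$(missing_tasks /path/to/config.txt done)` followed by `sbatch --array="$ids" myarrayjob.sh` when `$ids` is non-empty (myarrayjob.sh being a placeholder name).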

Conclusion

Array jobs in Slurm are a simple and efficient way to submit and manage groups of similar tasks on your cluster. They allow you to easily scale up from a single sample to hundreds of samples, or to run multiple iterations of a script with varying parameters. And the best part? Well, there's nothing more satisfying than watching your job array multiply and conquer all those repetitive tasks for you!